Building an iOS App to Serve a Fine-Tuned Llama Model with Union and MLC-LLM

So, I had this idea the other day: What if I could get a ChatGPT-like AI to run on my iPhone and speak to me in my native language, Telugu? Not through some API or cloud service, but actually running directly on my phone.

While existing foundation models work well to a certain extent, they aren't particularly fluent in languages other than English. To address this, I’d need to fine-tune an LLM with a dataset of Telugu prompts and responses, package it up, and turn it into an app.

We all know LLMs are demanding when it comes to computing power. They need a lot of resources (GPUs, CPUs, and memory) to generate predictions. The reason is simple: text is generated one token at a time, so even a single sentence takes a forward pass through the model for every token it contains, and these models typically have billions of parameters.
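To put the parameter count in perspective, here is a rough back-of-the-envelope estimate of the memory needed just to hold the weights of an 8-billion-parameter model at different precisions. It's only a sketch: it ignores activations and the KV cache, and the `8e9` figure is a rounded assumption rather than the exact Llama 3 parameter count.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory (in GB, where 1 GB = 1e9 bytes) needed to store the weights."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# Assumption: a rounded parameter count for an "8B" model.
NUM_PARAMS = 8e9

for label, bits in [("float32", 32), ("float16", 16), ("4-bit", 4)]:
    print(f"{label:>8}: {weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

Even at 4 bits per weight, the weights alone come to roughly 4 GB, which is why quantization matters so much once a phone is the target.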

And I want this app to run on what we call "edge devices." If you're not familiar, edge devices are hardware that operates closer to the end-user or the source of data—like smartphones, tablets, or IoT devices.

In this post, I’ll walk you through how I fine-tuned a Llama 3 8B Instruct model on an A100 using Union Serverless, and then got it to run as an iOS app with the help of MLC-LLM—all at no cost, as Union Serverless offers $30 in free credits!

Fine-tuning Llama 3 on Cohere Aya

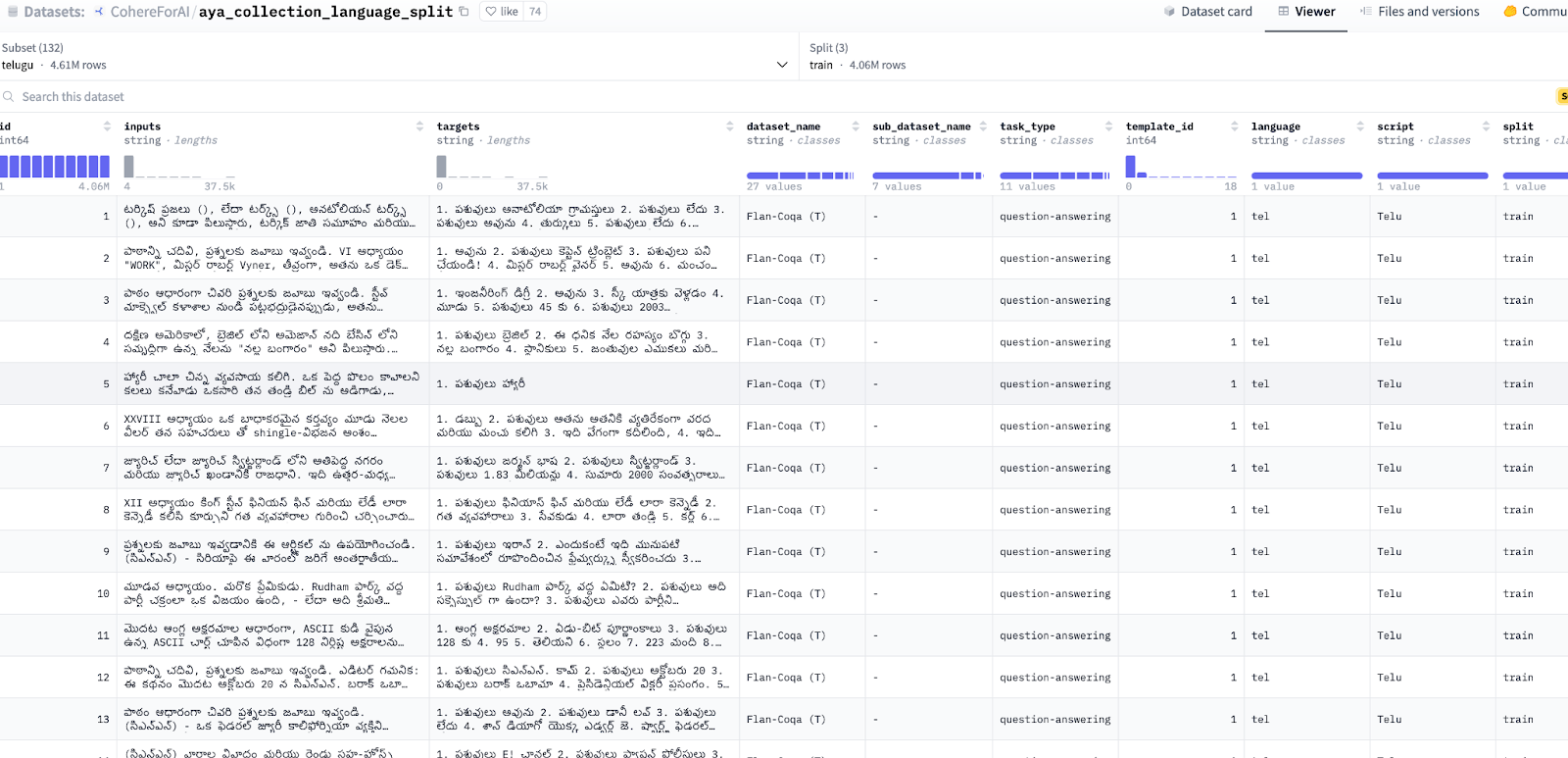

First things first: we need to download the Llama 3 8B Instruct model from the Hugging Face hub, along with the Cohere Aya dataset, which contains a diverse collection of prompts and completions across multiple languages.

We set up both downloads as cached tasks, so the model and dataset won’t have to be downloaded again in future runs.

Next up, we’ll define a task to fine-tune our model. For this, we’ll leverage QLoRA (Quantized Low-Rank Adaptation), which trains small low-rank adapter matrices on top of a frozen, 4-bit quantized base model, so fine-tuning needs far less memory than updating every weight. We’ll be using an A100 GPU, available on Union Serverless, to handle the heavy lifting.
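To get a feel for why low-rank adapters are so much cheaper to train, here is a small sketch that counts trainable parameters for a single square projection matrix. The hidden size of 4096 matches Llama 3 8B; the rank of 16 is an assumption chosen for illustration, not necessarily the value used in the actual fine-tuning task.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA pair: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


HIDDEN_SIZE = 4096  # hidden size of Llama 3 8B
RANK = 16           # assumption: illustrative adapter rank

full = full_params(HIDDEN_SIZE, HIDDEN_SIZE)
lora = lora_params(HIDDEN_SIZE, HIDDEN_SIZE, RANK)
print(f"full: {full:,} | LoRA: {lora:,} | ratio: {lora / full:.2%}")
```

Under these assumptions, the adapter trains well under 1% of the parameters of the matrix it sits next to, while the (quantized) base weights stay frozen.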

To keep track of the training process, we’ll set up Weights and Biases, which will help us monitor the model’s performance throughout the fine-tuning process. Once we’re done, we’ll save the adapters and return them as a `FlyteDirectory`. For fine-tuning, we’re starting with 1,000 samples.

Next, we’ll merge the adapter with the original base model, which will allow us to serve the fully integrated model as an iOS app later on. It’s worth noting that we’re using an L4 GPU for this step since the A100 isn't necessary just for merging the models. One of the great features of Union Serverless is how easy it is to switch between GPUs, making it super convenient!
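Conceptually, merging a LoRA adapter means folding the low-rank update back into the base weight: `W_merged = W + (alpha / rank) * B @ A`. The toy sketch below shows this with plain Python lists so it runs anywhere. In practice a library handles this for you (PEFT, for example, exposes `merge_and_unload()`), and whether the merge task here uses that exact call is an assumption on my part.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w: Matrix, lora_b: Matrix, lora_a: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * (B @ A), i.e. the base weight with the adapter folded in."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Toy values, purely illustrative: a 2x2 base weight and a rank-1 adapter.
w = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]  # shape 2 x 1
lora_a = [[0.5, 0.5]]    # shape 1 x 2

merged = merge_lora(w, lora_b, lora_a, alpha=2.0, rank=1)
print(merged)  # [[2.0, 1.0], [2.0, 3.0]]
```

After the merge there is no separate adapter to load at inference time, which is what lets the model be converted and shipped as a single set of weights.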

Once the task is complete, it returns an Artifact that carries the model and dataset names as partitions. This means you can easily retrieve the merged model artifact later in other workflows by querying the artifact with the names of the dataset and the model.

Converting model weights for MLC compatibility

MLC-LLM is a powerful ML compiler and high-performance deployment engine designed specifically for LLMs. It allows you to deploy your models across various platforms, including iOS, Android, web browsers, and even as a Python or REST API.

To get our merged model up and running with MLC-LLM, we first need to convert the model weights into the MLC format. This is a straightforward process that involves running two commands: `mlc_llm convert_weight` to transform the weights into the MLC format, and `mlc_llm gen_config` to generate the chat configuration and process the tokenizers.
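Here is a minimal sketch of what those two invocations can look like when assembled from Python. The directory names are hypothetical, and the `q4f16_1` quantization and `llama-3` conversation template are my assumptions for a Llama 3 model rather than settings confirmed by the original workflow. The script only prints the commands; the `subprocess.run` line is left commented out so nothing runs unless `mlc_llm` is installed.

```python
import shlex


def convert_weight_cmd(model_dir: str, quantization: str, output_dir: str) -> list[str]:
    """Build the `mlc_llm convert_weight` command as an argument list."""
    return ["mlc_llm", "convert_weight", model_dir,
            "--quantization", quantization, "-o", output_dir]


def gen_config_cmd(model_dir: str, quantization: str,
                   conv_template: str, output_dir: str) -> list[str]:
    """Build the `mlc_llm gen_config` command as an argument list."""
    return ["mlc_llm", "gen_config", model_dir,
            "--quantization", quantization,
            "--conv-template", conv_template, "-o", output_dir]


# Hypothetical paths and assumed settings, for illustration only.
MODEL_DIR = "./merged-model"
QUANTIZATION = "q4f16_1"
OUTPUT_DIR = "./dist/merged-model-q4f16_1-MLC"

commands = [
    convert_weight_cmd(MODEL_DIR, QUANTIZATION, OUTPUT_DIR),
    gen_config_cmd(MODEL_DIR, QUANTIZATION, "llama-3", OUTPUT_DIR),
]

for cmd in commands:
    print(shlex.join(cmd))
    # import subprocess; subprocess.run(cmd, check=True)  # uncomment to execute
```

Both commands write into the same output directory, so the converted weights and the generated chat config end up side by side, ready to be uploaded.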

Next, we’ll push the model to a Hugging Face repository to make it easily accessible and create a launch plan to execute the conversion workflow once the fine-tuned model artifact is generated.

To run the workflows on Union Serverless, you can find the necessary commands in the README file.

Building the iOS app

To build the iOS app, you’ll need to be working on macOS, and a few key dependencies need to be installed first.

Here’s a script that handles all the setup for you.

Once everything is in place, here’s the code that runs behind the scenes to generate the iOS app:

The `mlc_llm package` command compiles the model, builds the runtime and tokenizer, and creates a `dist/` directory inside the `MLCChat` folder.

At this stage, we’re also bundling the model weights directly into the app to avoid having to download them from Hugging Face every time the app runs—this speeds things up considerably.
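For reference, `mlc_llm package` is driven by an `mlc-package-config.json` file, and bundling is switched on per model. The sketch below generates such a config from Python. The field names follow the packaging config format as I understand it from the MLC-LLM docs, so double-check them against your installed version; the Hugging Face repo, the model ID, and the VRAM estimate are all hypothetical placeholders.

```python
import json


def build_package_config(model: str, model_id: str, estimated_vram_bytes: int) -> dict:
    """Assemble a packaging config for a single iPhone model with bundled weights."""
    return {
        "device": "iphone",
        "model_list": [
            {
                "model": model,                                # where the MLC-format weights live
                "model_id": model_id,                          # name the app uses for the model
                "estimated_vram_bytes": estimated_vram_bytes,  # placeholder estimate
                "bundle_weight": True,                         # ship the weights inside the app
            }
        ],
    }


# Hypothetical values, for illustration only.
config = build_package_config(
    model="HF://your-username/your-model-q4f16_1-MLC",
    model_id="your-model-q4f16_1-MLC",
    estimated_vram_bytes=5_000_000_000,
)

print(json.dumps(config, indent=2))
```

Setting `bundle_weight` to `True` is the part that corresponds to shipping the weights inside the app instead of fetching them at launch.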

Next, we need to open `./ios/MLCChat/MLCChat.xcodeproj` using Xcode (make sure Xcode is installed and you’ve accepted its terms and conditions). Also, ensure you have an active Apple Developer account, as Xcode may prompt you to use your developer team credentials and set up a product bundle identifier.

If you’re just looking to test the app, follow these steps:

- Go to Product > Scheme > Edit Scheme and replace “Release” with “Debug” under “Run”.

- Skip adding developer certificates.

- Use this bundle identifier pattern: `com.yourname.MLCChat`.

- Remove the "Extended Virtual Addressing" capability under the Target section.

Check out the demo showcasing the iOS app I created during the 2024 Union Hackathon!

This is encouraging and likely means that fine-tuning on more data samples would yield even better results! You can check out the existing codebase on GitHub here.

Democratizing AI: Your turn to build

Model fine-tuning and deployment should be accessible to everyone, and Union combined with MLC-LLM makes this a reality. With Union, you can fine-tune your models, perform all necessary pre-processing, and generate MLC model artifacts ready for deployment on any platform. Union is an ideal choice for building end-to-end AI solutions, and we’re here to support you every step of the way!

To dive deeper into Union Serverless, check out the documentation. If you have any questions about Serverless, don’t hesitate to join our Slack community in the #union-serverless channel!

Can't wait to see what you'll build! 🚀