Consistently deliver high-throughput, reliable, and fault-tolerant inference for production-scale AI applications

Dynamically scale resources to optimize performance and minimize costs. Streamline the execution process, enabling consistent, reliable, and reproducible inference workflows.

Deliver high-throughput inference for demanding AI-enabled applications with GPUs and TPUs

Union’s distributed, scalable, and elastic execution model allocates computational resources across nodes and clusters. Resource allocation scales dynamically as workloads fluctuate, minimizing costs while maintaining performance during peak demand.

Guarantee reliability and availability of inference workflows

Run inference workflows with fault-tolerant execution that withstands infrastructure failures, transient network issues, and data inconsistencies. Configure retry policies, error thresholds, and recovery strategies for individual tasks so that workflows automatically retry failed tasks, recover from errors, and resume execution without manual intervention.
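To make the idea of a per-task retry policy concrete, here is a minimal plain-Python sketch. It does not use Union's API; the `with_retries` decorator, its parameters, and the `flaky_inference` task are hypothetical names chosen for illustration only.

```python
import functools
import time


def with_retries(max_retries: int, backoff_seconds: float = 0.0):
    """Hypothetical decorator: re-run a task up to `max_retries` extra times."""
    def decorator(task):
        @functools.wraps(task)
        def wrapper(*args, **kwargs):
            attempt = 0
            while True:
                try:
                    return task(*args, **kwargs)
                except Exception:
                    # Out of retries: surface the original error to the caller.
                    if attempt >= max_retries:
                        raise
                    attempt += 1
                    # Linear backoff before the next attempt.
                    time.sleep(backoff_seconds * attempt)
        return wrapper
    return decorator


# Counter used only to simulate a transient failure in this sketch.
calls = {"count": 0}


@with_retries(max_retries=3)
def flaky_inference(x: float) -> float:
    """Stand-in inference task that fails on its first two calls."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient network issue")
    return x * 2.0


result = flaky_inference(21.0)  # succeeds on the third attempt
```

The retry budget is attached to the individual task rather than to the whole pipeline, so one unreliable step can be retried without re-running the steps that already succeeded.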

Create multi-model, multi-modal inference pipelines

Specify dependencies between tasks so that model inference tasks are executed only after prerequisite tasks, such as data preprocessing or model loading, have completed successfully. Implement advanced workflow patterns, such as branching, looping, and error handling, to handle diverse inference scenarios and edge cases. Amortize the cost of ephemeral infrastructure by reusing hot environments across multiple executions using the Actors framework (coming soon).
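The dependency and branching patterns described above can be sketched in plain Python. This is an illustration under stated assumptions, not Union's API: `preprocess`, `load_model`, and `run_pipeline` are hypothetical names, and the "models" are trivial stand-ins.

```python
from typing import Callable, List


def preprocess(raw: List[str]) -> List[str]:
    """Prerequisite step: normalize inputs and drop empty ones."""
    return [s.strip().lower() for s in raw if s.strip()]


def load_model(kind: str) -> Callable[[str], int]:
    """Prerequisite step: return a stand-in 'model' for the given kind."""
    if kind == "short":
        return lambda text: len(text)       # character count
    return lambda text: len(text.split())   # word count


def run_pipeline(raw: List[str]) -> List[int]:
    """Run inference only after preprocessing and model loading finish."""
    cleaned = preprocess(raw)
    if not cleaned:
        # Edge case: nothing left to score after preprocessing.
        return []
    # Branch: choose a model based on the preprocessed data.
    kind = "short" if all(len(s) < 10 for s in cleaned) else "long"
    model = load_model(kind)
    # Loop: apply the selected model to each input.
    return [model(s) for s in cleaned]


short_scores = run_pipeline(["  Hello ", "", "World"])
long_scores = run_pipeline(["a quick brown fox jumps"])
empty_scores = run_pipeline(["   "])
```

Here the ordering falls out of the data flow: inference cannot start until it has both the cleaned inputs and a loaded model, and the branch decides which model that is.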

Simplify with a battle-tested, unified platform for end-to-end machine learning workflows

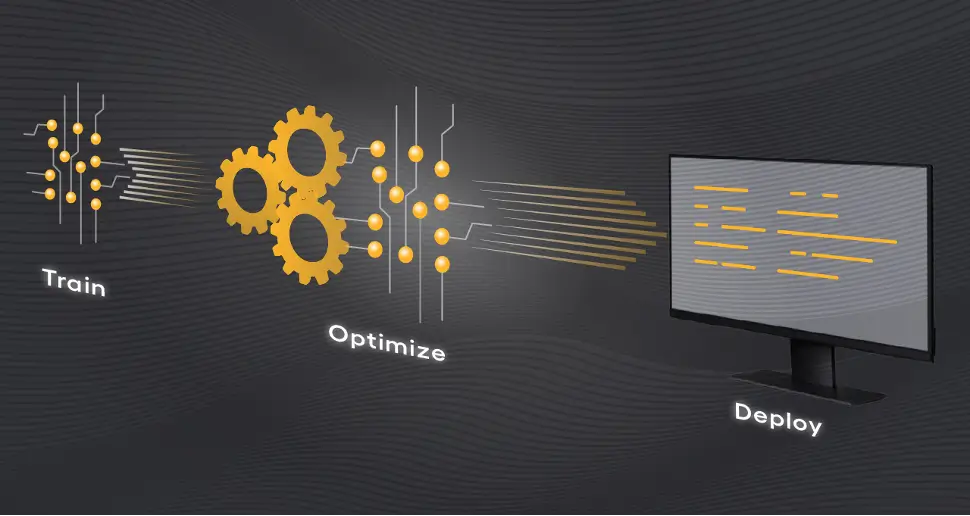

Integrate inference tasks into workflows for all stages of the machine learning lifecycle, from data preprocessing and model training to inference. Ensure consistency, traceability, and reproducibility across the entire workflow, simplifying workflow design, management, and maintenance.

Testimonials

“Our products are not powered by a single model but instead are a composite of many models in a larger inference pipeline. What we serve are AI pipelines, which are made of functions, some of which are AI models. Union is ideal for such inference pipelines.”

Resources