Pandera 0.17 Adds Support for Pydantic v2

I’m super excited to announce that Pandera now supports Pydantic v2! 🎉

This version brings Pandera up to date with Pydantic. Back in June, Pydantic v2 came out, providing a 5-50x performance improvement over v1 and an overhauled architecture to improve extensibility and maintainability. (Read more about it here.)

Pandera schemas in a Pydantic model

Pandera has supported a Pydantic integration since version 0.8.0. If you have a `pydantic.BaseModel` that contains a pandas DataFrame attribute, you can do something like:

When you initialize `PydanticModel`, Pandera will be used under the hood to validate the incoming raw dataframe.

Using Pydantic models in Pandera schemas

If you want to reuse an existing Pydantic model definition from your codebase, you can also define a `pandera.DataFrameModel` (or `DataFrameSchema`) that performs row-wise validation. Suppose that this is our Pydantic model:
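As an illustration, a row-level model could look like the sketch below. The name `Record` and its fields are hypothetical stand-ins for whatever model already lives in your codebase.

```python
from pydantic import BaseModel


class Record(BaseModel):
    """Hypothetical row-level model: one instance describes one DataFrame row."""

    name: str
    xcoord: int
    ycoord: int


# A single row, validated on construction.
record = Record(name="foo", xcoord=1, ycoord=2)
```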

You can then use the `PydanticModel` type in your `DataFrameModel` definition:

This is logically equivalent to the following Pandera schema:

When Pandera uses the `PydanticModel` type to do row-wise validation, it first converts the dataframe into a list of dictionaries, where the dictionary is a record of each row. It then applies a dynamic Pydantic model that’s equivalent to:

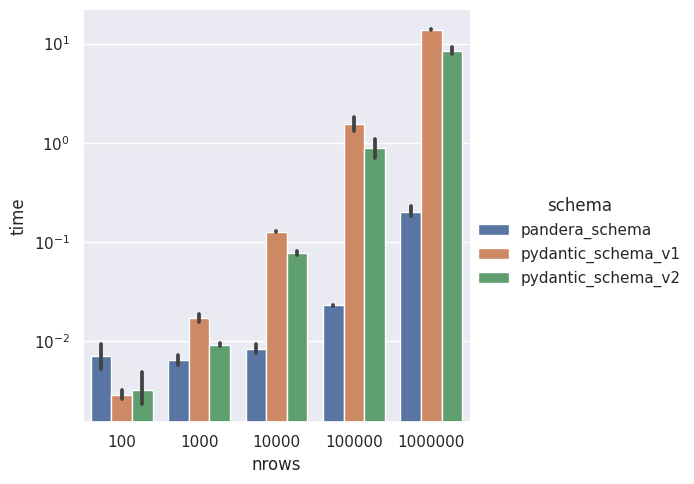
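The conversion step described above can be approximated in plain pandas and Pydantic. This is only an illustration of the idea, not Pandera's internal code; `Record` and the helper `validate_rows` are hypothetical names.

```python
import pandas as pd
from pydantic import BaseModel, ValidationError


class Record(BaseModel):
    """Hypothetical row-level Pydantic model."""

    name: str
    xcoord: int
    ycoord: int


def validate_rows(df: pd.DataFrame) -> list:
    """Convert the frame to one dict per row, then validate each dict."""
    records = df.to_dict(orient="records")  # list of {column: value} dicts
    return [Record(**row) for row in records]


df = pd.DataFrame({"name": ["foo", "bar"], "xcoord": [1, 2], "ycoord": [3, 4]})
rows = validate_rows(df)

# A value that cannot be parsed as an integer is rejected by Pydantic.
bad_df = pd.DataFrame({"name": ["foo"], "xcoord": ["c"], "ycoord": [3]})
try:
    validate_rows(bad_df)
except ValidationError as exc:
    print(exc)
```

The `to_dict` call is the data conversion cost that the benchmark section below refers to.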

Benchmarking Pandera’s row-wise validation with Pydantic

Because Pandera validates DataFrames in their native format, it’s generally faster than validating a DataFrame via Pydantic because you don’t have to convert the DataFrame into another format that Pydantic can operate on, like a list of dictionaries. That said, when your data is fairly small it might be OK to use row-wise validation with the `PydanticModel` type if you don’t want to maintain two sets of schemas.

But does Pydantic v2 improve row-wise validation in Pandera? We did a quick benchmark of a fairly simple case to get a sense of this.

The bar plot below shows validation of DataFrames with a varying number of rows, indicated by `nrows` on the x-axis. To give you a sense of the overall performance differences across all of these conditions, the y-axis shows validation run-time on a log scale. The takeaways are that Pandera performs better except for the 100-row DataFrame case, and that Pydantic v2 is faster than v1 (which has already been documented, for example, here and here). You can find the benchmark notebooks here:

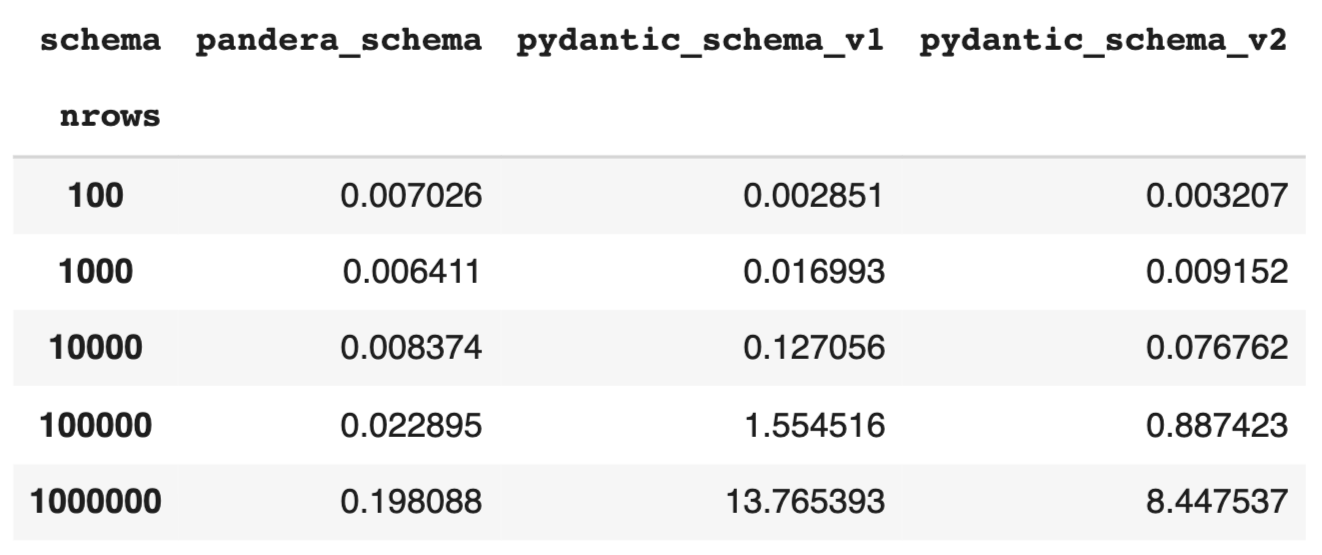

To give you a sense of how much faster Pydantic v2 is compared to v1 in the context of Pandera schema validation, let’s look at the table below, which contains validation wall-time averaged over ten runs. On average, Pandera schemas using Pydantic v2 run 1.5x to 1.75x faster than those using v1.

A better Pydantic integration would actually convert the Pydantic model to a native Pandera schema so that we don’t have to pay the DataFrame-to-list conversion cost, but for many small-to-medium dataset sizes this cost may be acceptable. If this performance enhancement is something that might interest you, feel free to open up a feature request.

Wrapping up

The 0.17 release also delivers bug fixes, improved docs and housekeeping changes; see the full changelog here.

What’s next for Pandera? Well, it might interest you that the community is starting to rally around the next big integration: support for Polars DataFrames 🐻‍❄️ 🔥. If you’re interested in getting involved or keeping abreast of changes, please head over to this issue (see the mini-roadmap in this comment and summary of major pieces of work here).

Thanks for reading, and if you’re new to Pandera, you can try it out quickly ▶️ here.