Cut OpenAI Batch Pipeline Costs by Over 50% with Zero Container Overhead

The OpenAI real-time client is ideal when you need immediate responses and price is not a factor, but its cost can be significant depending on the number of tokens. Instant results are often unnecessary, especially for tasks like summarizing data or running model evaluations. By aggregating these tasks into batches and processing them asynchronously, developers can significantly reduce operational costs without compromising functionality. That’s where the new OpenAI Batch API comes in.

However, implementing a batch processing system comes with its own set of challenges. Coordinating data ingestion, managing processing queues, and orchestrating result delivery require a robust infrastructure. Union makes things simple by helping you set up an end-to-end automated pipeline: it fetches data regularly, sends it for batch processing when needed, and notifies you when it’s done. Plus, thanks to the OpenAI Batch agent, you can forget about container hassles for batch polling loops.

Union simplifies the implementation and operation of this end-to-end automated pipeline while also providing significant cost-efficiency. The costs break down as follows:

- OpenAI real-time client: 100% baseline cost

- OpenAI Batch client: 50% of baseline

- Flyte OpenAI Batch agent: 50% of baseline, with zero container overhead for batch status retrieval

With Union, you can integrate pre- and post-processing of data into a single pipeline. It transforms potential infrastructure bloat into pure, bottom-line savings. The result is a lean, resource-optimized pipeline that maximizes your cost savings while simplifying your infrastructure and operations.
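To make the breakdown above concrete, here is a minimal arithmetic sketch. The token volume and the per-million-token price are made-up placeholders, not OpenAI's actual pricing; only the 50% batch discount comes from the text.

```python
def pipeline_cost(tokens: int, price_per_million: float, batch: bool = False) -> float:
    """Estimate model spend in dollars; batch requests are billed at 50% of the real-time price."""
    base = tokens / 1_000_000 * price_per_million
    return base * 0.5 if batch else base


# Hypothetical workload: 200M tokens at an assumed $10 per 1M tokens.
realtime = pipeline_cost(200_000_000, 10.0)
batched = pipeline_cost(200_000_000, 10.0, batch=True)
print(f"real-time: ${realtime:,.2f}, batch: ${batched:,.2f}")
```

The container savings from agent-based polling come on top of this and depend on your own compute pricing, so they are left out of the sketch.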

OpenAI Batch agent

The OpenAI Batch API endpoint allows users to submit requests for asynchronous batch processing.

Results are returned within 24 hours, and model usage is billed at a 50% discount. The Batch API is useful when you don’t require immediate results, want to save costs, and want higher rate limits.

The Flyte OpenAI Batch agent is built on top of the Batch API. It automates batch creation and status polling. You can provide either a JSONL file or a JSON iterator (to stream your JSON objects), and the agent handles uploading to OpenAI, creating the batch, and downloading the output and error files. Furthermore, with the agent, no additional costs are incurred while polling the batch status.
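For context, this is roughly the kind of polling loop you would otherwise keep alive in a container. It is a sketch, not the agent's implementation: `wait_for_batch` and the `get_status` callable are hypothetical names, and the status lookup is replaced with a stand-in so the example runs without network access. The status strings are assumed to match those documented for the Batch API.

```python
import time
from typing import Callable

# Statuses after which the batch will not change any further (assumed from the Batch API docs).
TERMINAL_STATUSES = {"completed", "failed", "expired", "cancelled"}


def wait_for_batch(
    get_status: Callable[[], str],
    interval_s: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call get_status repeatedly until it reports a terminal status, then return that status."""
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        sleep(interval_s)


# Stand-in for a real status lookup: replays a fixed sequence of statuses.
_fake_statuses = iter(["validating", "in_progress", "finalizing", "completed"])
final_status = wait_for_batch(lambda: next(_fake_statuses), interval_s=0.0)
print(final_status)
```

A loop like this spends most of its time sleeping, which is why running it inside a dedicated container is wasteful.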

Union lets you integrate the agent into your workflow to automate the entire process associated with preparing the data, creating a batch, and sending the outputs to downstream tasks for further processing. Since Union is a perfect fit for both data and ML pipelines, the pre- and post-processing stages can be easily handled in a single, centralized pipeline.

Image moderation system

With the ever-increasing volume of user-generated content on the internet, content moderation has become a necessity. From social media platforms to e-commerce sites, filtering out inappropriate or offensive content is essential for maintaining a safe environment.

AI has significantly improved the content moderation process, making it more efficient and easier to implement.

Suppose you want to build an image moderation system that:

- Fetches images from a cloud blob storage every x hours,

- Triggers a downstream workflow to send requests to a GPT-4 model for image moderation, and

- Triggers another workflow to read the output and error files and send an email notification.

Union enables you to implement this seamlessly as follows:

- Define a launch plan with a cron schedule to fetch images every x hours.

- Use artifacts and reactive workflows to trigger the downstream workflow.

- Send JSON requests to GPT-4 using the Flyte OpenAI Batch agent.

- Wait for the batch processing to complete.

- Send an email notification announcing the completion status.

Let’s take a look at how we can implement this in code.

First, let’s look at one way to organize this simple project.

This example uses a key Union design pattern called reactive workflows. Reactive workflows use artifacts, the versioned outputs of upstream workflows, to trigger other downstream workflows (see our blog post on reactive workflows). Using reactive workflows, we can easily separate our upstream workflow in `fetch_images_wf.py` and our downstream workflow in `batch_wf.py` into different Python modules.

Image collection workflow

We need our image collection workflow to produce an artifact containing a set of images that require further processing. Artifacts are first-class entities representing workflow outputs. They let us attach metadata through partitions, consume artifacts as inputs to other workflows using queries, and track the lineage of a workflow output as it is passed through different workflows. We want our image collection workflow to create an `Artifact` if new images are present in our blob store. The `Artifact` will let us trigger a downstream workflow to create requests for our OpenAI Batch agent.

To start, we must fetch the images in our S3 bucket. We can use the existing Boto agent, which is part of the SageMaker integration with Flyte. Using the `list_objects_v2` method, we can get the names of the images and the times they were added to the bucket (a maximum of 1000 objects can be retrieved per call). This will help us filter out old images that we don’t need to process.
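The filtering step can be sketched in plain Python. This assumes a response shaped like the one boto3's `list_objects_v2` returns, with a `Contents` list whose entries carry `Key` and `LastModified`; the function name `new_image_keys` and the sample data are hypothetical.

```python
from datetime import datetime, timezone


def new_image_keys(response: dict, since: datetime) -> list[str]:
    """Return the keys of objects modified after `since`; an empty bucket has no 'Contents' key."""
    return [
        obj["Key"]
        for obj in response.get("Contents", [])
        if obj["LastModified"] > since
    ]


# Illustrative response fragment; a real response carries more fields per object.
sample_response = {
    "Contents": [
        {"Key": "images/old.png", "LastModified": datetime(2024, 1, 1, tzinfo=timezone.utc)},
        {"Key": "images/new.png", "LastModified": datetime(2024, 1, 2, 12, tzinfo=timezone.utc)},
    ]
}
last_run = datetime(2024, 1, 2, tzinfo=timezone.utc)
print(new_image_keys(sample_response, last_run))
```

Comparing against the time of the previous run is one simple way to decide what counts as "new"; timezone-aware datetimes are used on both sides so the comparison is well defined.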

Then, we structure a workflow in which `fetch_images` checks the result of the Boto agent for new images, and a `conditional` emits an `Artifact` we call `ImageDir` if new images have been added to the bucket since the last time the workflow ran.

Taking a closer look, we use `list[FlyteFile]` to pass around the images we want to process.

To round off our upstream `fetch_images_wf` workflow, we create a `LaunchPlan` to execute our workflow at a desired schedule using `CronSchedule`.
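As a small illustration of the "every x hours" schedule, the helper below builds a cron string. It assumes the standard five-field cron syntax; `every_n_hours` is a hypothetical helper, and the resulting string is the kind of value you would hand to `CronSchedule`.

```python
def every_n_hours(n: int) -> str:
    """Build a five-field cron expression that fires at minute 0 of every n-th hour."""
    if not 1 <= n <= 23:
        raise ValueError("n must be between 1 and 23")
    return f"0 */{n} * * *"


print(every_n_hours(6))
```

Note that a `*/n` hour step restarts at midnight, so the gaps between runs are only even when `n` divides 24.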

Batch request workflow

Our second workflow creates and sends requests to the OpenAI API. It is downstream of our image collection workflow and contains two steps: creating a JSON Lines request for the Flyte OpenAI Batch agent, and calling the agent.

The `create_request` task simply loops through the images we need to process and yields requests to send to the OpenAI Batch API.
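A minimal sketch of such a generator is shown below. It is not the post's actual task: `build_requests`, the prompt text, the model name, and the example URL are all assumptions. The request envelope (`custom_id`, `method`, `url`, `body`) follows the Batch API's JSONL input format, and the `image_url` content part follows the Chat Completions message format.

```python
import json
from typing import Iterator


def build_requests(image_urls: list[str], model: str = "gpt-4o") -> Iterator[dict]:
    """Yield one Batch API request per image; custom_id ties each output back to its input."""
    for i, url in enumerate(image_urls):
        yield {
            "custom_id": f"image-{i}",
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,  # assumed model name; substitute the model you use
                "messages": [
                    {
                        "role": "user",
                        "content": [
                            {"type": "text", "text": "Is this image safe for a general audience?"},
                            {"type": "image_url", "image_url": {"url": url}},
                        ],
                    }
                ],
            },
        }


requests = list(build_requests(["https://example.com/cat.png"]))
# One JSON object per line is the JSONL form that would go into a batch input file.
jsonl = "\n".join(json.dumps(r) for r in requests)
print(jsonl)
```

Yielding requests one at a time keeps memory use flat for large image sets, which fits the agent's option to accept a JSON iterator instead of a file.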

Next, we define a trigger on the `ImageDir` artifact that automatically executes the workflow for OpenAI batch processing. The batch workflow accepts `jsonl_in` as a runtime parameter, which we provide by sending the JSON generator returned from the `create_request` task.

It is also worth noting the use of `notification` in the `LaunchPlan`. These few lines allow us to send email or Slack messages upon the successful completion of the downstream workflow. This is very handy given that the OpenAI Batch API may take quite a while to return results, and we don’t want to constantly check the status of our request.

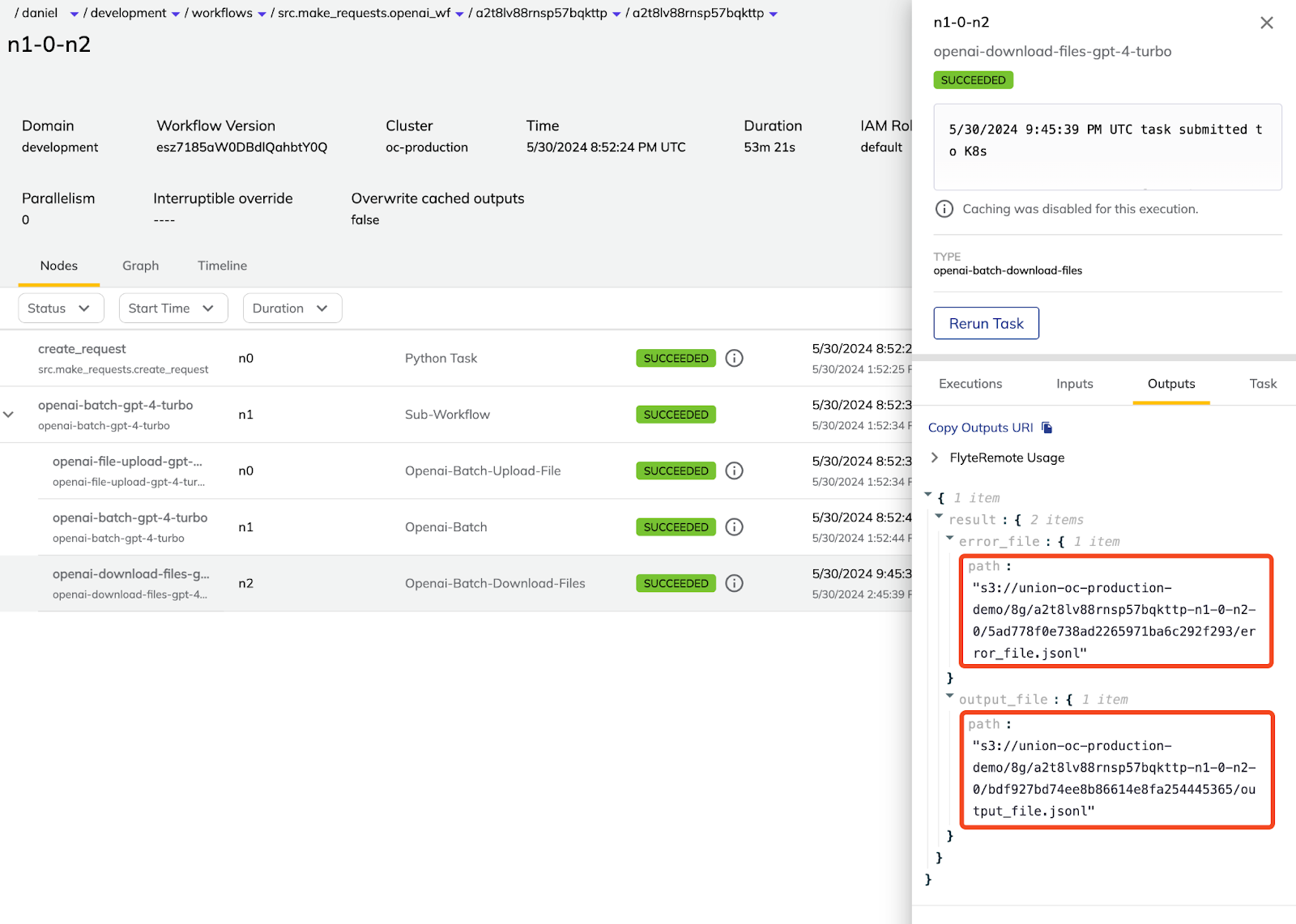

Navigating to the execution link, we can see that the execution took 53 minutes to complete and returned S3 URIs for the output and error files.
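Once the output file has been downloaded, a post-processing task could split it into successes and failures. The sketch below assumes each line follows the Batch API's output shape (`custom_id`, `response` with `status_code` and `body`, and `error`); `split_results` is a hypothetical helper, and the sample lines are made up for illustration rather than taken from a real run.

```python
import json


def split_results(jsonl_text: str) -> tuple[dict, dict]:
    """Return (custom_id -> reply text) for successes and (custom_id -> error or response) for the rest."""
    ok, failed = {}, {}
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        rec = json.loads(line)
        resp = rec.get("response") or {}
        if rec.get("error") is None and resp.get("status_code") == 200:
            ok[rec["custom_id"]] = resp["body"]["choices"][0]["message"]["content"]
        else:
            failed[rec["custom_id"]] = rec.get("error") or resp
    return ok, failed


# Made-up lines for illustration only; not actual API output.
sample_output = "\n".join([
    json.dumps({"custom_id": "image-0", "error": None,
                "response": {"status_code": 200,
                             "body": {"choices": [{"message": {"content": "safe"}}]}}}),
    json.dumps({"custom_id": "image-1", "response": None,
                "error": {"code": "example_error", "message": "placeholder"}}),
])
ok, failed = split_results(sample_output)
print(ok, failed)
```

Keying both dictionaries by `custom_id` makes it straightforward to map each verdict back to the image that produced it.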

Here are some example responses returned by the OpenAI Batch API:

By leveraging the long-running, stateless, and locally testable Boto and OpenAI Batch agents, we were able to make requests to external resources in a simple yet cost-effective way. These processes were decoupled and automated through launch plan schedules, artifacts, and reactive workflows, all while maintaining a clear record of data lineage. The end-to-end example is on GitHub.

Build efficient batch inference pipelines

With the addition of the OpenAI Batch agent into Union, you can build end-to-end batch inference pipelines with pre- and post-processing included. This integration also helps you save costs in two key ways:

- Models are offered at a 50% discount.

- There are no additional costs incurred when polling the batch status using Union.

If this interests you, check out our documentation. The agent will soon be available on Union serverless! Contact the Union team if you're interested in implementing batch inference solutions.